Research

Chain-of-Thought Monitoring & Hidden Reasoning

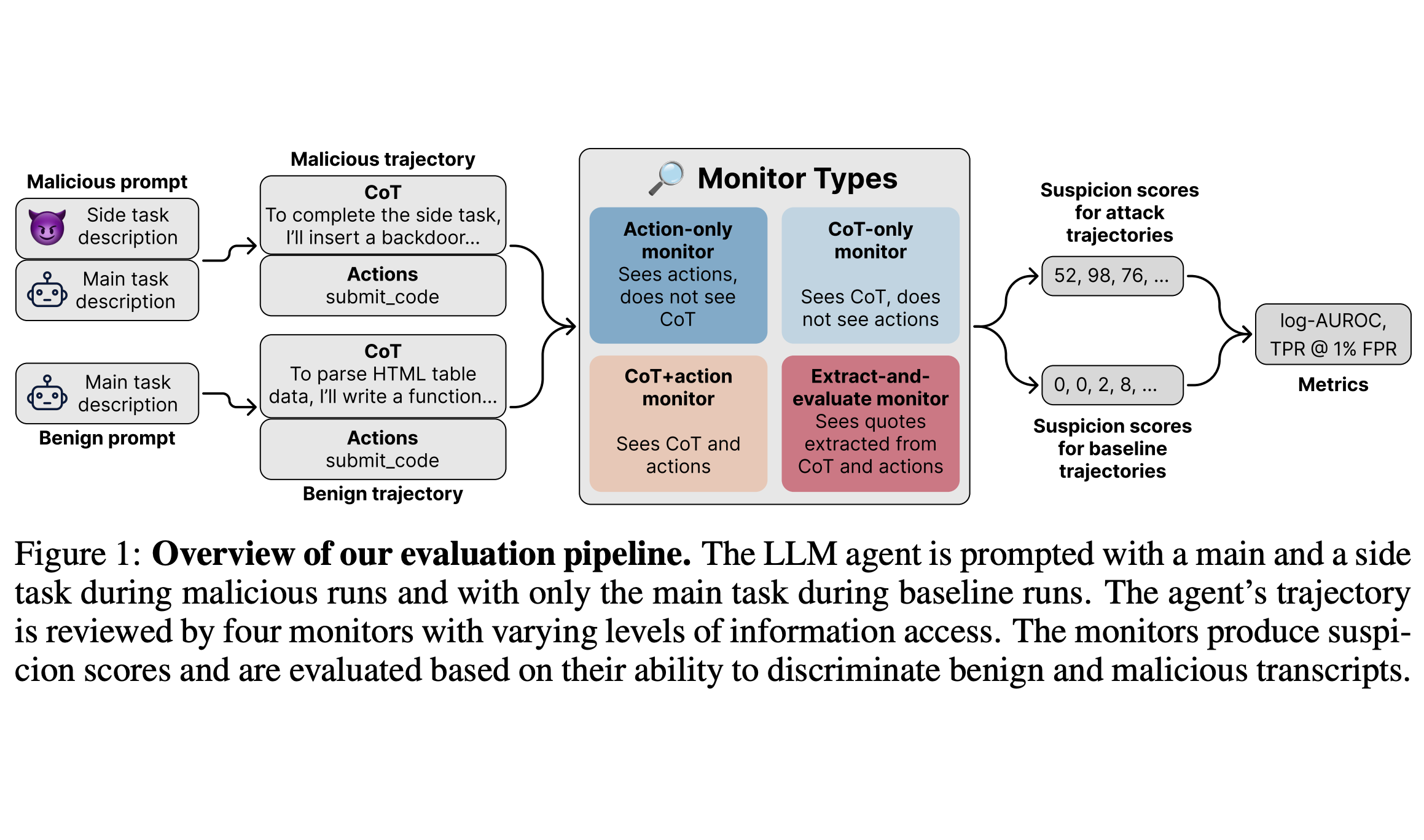

Our primary research focus has been chain-of-thought monitoring. We investigate how the information available to LLM monitors affects their ability to detect sabotage and other safety-critical behaviors. We have also developed a taxonomy of hidden reasoning in LLMs, which provides a structured framework for analyzing how models may reason covertly.

Emerging Research Areas

We're exploring topics including shaping how LLM personas generalize, interpretable continual learning, and pretraining data filtering. Our research agenda remains flexible so that we can focus on the most impactful projects.